Auditory Perception in Psychology (Pdf)

This article is about auditory perception in psychology. We start with the basic characteristics of sound and the structure of the ear, and then move on to theories of speech perception, with a focus on the motor theory. So far we have talked about visual perception: its different aspects, how it can be used to recognize objects and to interact with the environment, and the different modalities and theories related to it.

Today I will begin talking about auditory perception. Auditory perception is basically hearing, and hearing, too, begins with transduction. As we saw in the case of visual perception, any sensory input coming to the brain via the senses needs to be converted into a representation that the brain can handle, and that process is called transduction.

Hearing: The Preliminaries

As far as auditory perception or hearing is concerned, sound waves are collected by our ears and converted into neural impulses, which are then sent to the brain. There they are integrated with past experience, that is, whatever knowledge you might already have about those kinds of sounds, and that information is used to recognize or understand whatever the sound is supposed to convey.

The human ear is sensitive to a wide range of sounds, from the faint tick of a clock to the roar of a rock band. But it is especially sensitive to the range of frequencies that coincides with the human voice. You can see why this is an evolutionary advantage: the most important stimulus we hear in our lifetimes is other people talking, and we use it to understand much of what is going on in our environment.

The ear is basically an organ that detects sound waves, much as the eyes detect light energy. Sounds are generated by vibrating objects. For example, when somebody is talking their vocal cords are vibrating, and when you pluck a guitar string its vibration produces the sound you hear coming out of the guitar. This vibration causes air molecules to bump into each other and produce what are called sound waves.

Sound waves travel outward from the source of the vibration in peaks and valleys, like ripples. You can picture this by dropping a stone into a pond: the spot where the stone has fallen creates ripples that expand outward, much as sound waves propagate. Sound waves need to be carried by a medium such as air, water, or metal, and it is the changes in pressure in the medium that your ear is able to detect.

Now let us talk a little about the physical characteristics of sound. We detect both the wavelength and the amplitude of sound waves. The wavelength of a sound wave is tied to its frequency, which is measured as the number of waves that arrive per second. Frequency determines what is called the pitch of the sound, or its shrillness, that is, the perceived frequency of the sound. Longer sound waves have a lower frequency and produce a lower pitch, whereas shorter sound waves have a higher frequency and produce a higher pitch.

The amplitude, or height, of the sound wave determines how much energy it contains, and you perceive it as the loudness of that sound: larger waves are perceived as louder. Loudness is your experience of whatever sound you are hearing, and it is quantified in a unit known as the decibel (dB). Zero decibels is approximately the absolute threshold of human hearing.
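To make the link between longer waves and lower frequency concrete, here is a minimal sketch. It assumes the standard relation wavelength = speed / frequency and a speed of sound of 343 m/s in air; neither the formula nor that number is stated in the lecture, and the function name `wavelength_m` is only illustrative.

```python
# Assumed value, not from the lecture: speed of sound in dry air at about 20 °C.
SPEED_OF_SOUND_M_PER_S = 343.0


def wavelength_m(frequency_hz: float, speed: float = SPEED_OF_SOUND_M_PER_S) -> float:
    """Return the wavelength in metres of a sound wave with the given frequency."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return speed / frequency_hz


# Low, middle, and high tones: the wavelength shrinks as the frequency grows.
for frequency in (100.0, 1000.0, 10000.0):
    print(f"{frequency:>8.0f} Hz -> {wavelength_m(frequency):.4f} m")
```

Running the loop shows the wavelength shrinking as the frequency rises, which is the inverse relationship described above.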

So anything below zero decibels you cannot hear, and anything above zero decibels you start to hear. For example, a typical conversation might be around 60 decibels, the sound of your breathing is around 10 decibels, and so on. The scale increases tenfold at each step: every time you move up by 10 decibels, the intensity of the sound is multiplied by 10.
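The tenfold rule can be worked through with a small sketch. It assumes the usual definition of the decibel as ten times the base-10 logarithm of an intensity ratio, which is consistent with the rule above but is not spelled out in the lecture; the function name `intensity_ratio` is made up for illustration.

```python
def intensity_ratio(level_a_db: float, level_b_db: float) -> float:
    """Return how many times more intense a sound at level_a_db is than one at level_b_db.

    Assumes the standard decibel definition: every 10 dB is a factor of 10 in intensity.
    """
    return 10 ** ((level_a_db - level_b_db) / 10)


conversation_db = 60.0  # figure quoted in the text
breathing_db = 10.0     # figure quoted in the text

ratio = intensity_ratio(conversation_db, breathing_db)
print(f"A conversation carries about {ratio:,.0f} times the intensity of breathing.")
```

The two sounds are 50 decibels apart, which is five steps of 10 decibels, so the intensity is multiplied by 10 five times over.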

Structure of the Ear

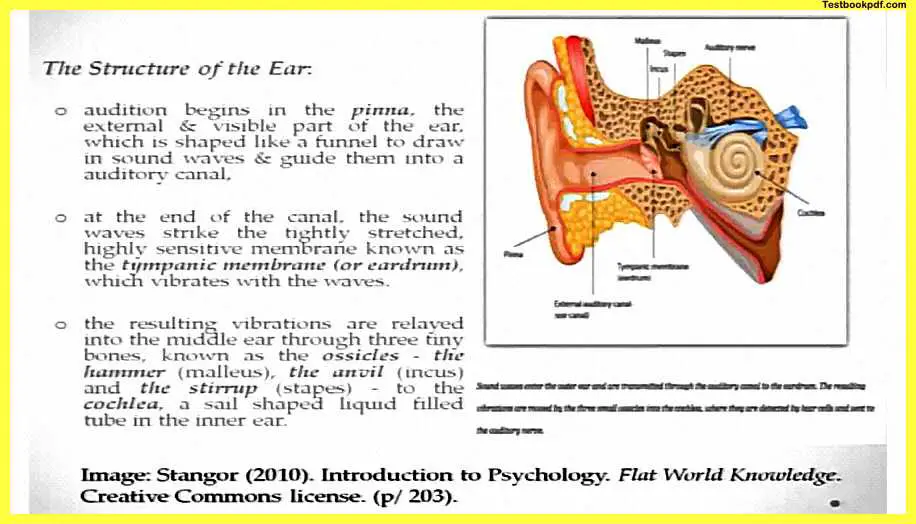

Audition, or hearing, begins in the external ear, or pinna, which is the visible part of the ear. You can see that the external ear is shaped like a funnel, and this funnel draws sound waves from the outside into the auditory canal.

What is the auditory canal?

At the end of the auditory canal is a sensitive membrane called the tympanic membrane, or eardrum, which receives vibrations from the external environment. Once received at the tympanic membrane, these vibrations are conveyed through the middle ear by three tiny bones called ossicles: the hammer, the anvil, and the stirrup. The ossicles convey the vibrations to the snail-shaped structure you can see in the figure, the cochlea, which is the innermost part of the ear. The vibrations cause the membrane of the oval window of the cochlea to vibrate, which disturbs the fluid inside the cochlea, and the movement of that fluid bends what are called the hair cells of the inner ear.

What are the hair cells of the inner ear?

The movement of these hair cells triggers neural impulses, so here you can see how the vibration is converted into neural impulses. These impulses are conveyed to the auditory nerve, which is connected to the brain, where the further processing of the sound takes place. The cochlea contains around 16,000 hair cells, each of which holds a bundle of fibers called cilia on its tip. The cilia are so sensitive that they can detect a movement that pushes them by as little as the width of an atom and respond to it.

Two Different Mechanisms

The loudness of a sound is directly determined by the number of hair cells, or cilia, that are vibrating. Two different mechanisms have been proposed for detecting pitch. The first, the frequency theory, proposes that whatever the pitch of the sound wave, nerve impulses of a corresponding frequency are sent along the auditory nerve. For example, a tone of 600 hertz will be transduced into 600 nerve impulses per second. But this is slightly impractical, because for a sound of much higher pitch a neuron cannot fire fast enough to produce that many impulses per second.

So an alternative solution has been proposed: to reach the necessary speed, the neurons may work together in a sort of volley system in which different neurons fire in sequence, allowing us to detect sounds up to about 4,000 hertz.

One section of neurons fires at one point, another section at the next, and so on.
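The volley idea can be sketched as a toy schedule in which each cycle of the tone is handed to the next neuron in rotation. This is only an illustration, not a physiological model: the function name `volley_spike_times`, the group size of four, and the assumed ceiling of 1,000 impulses per second for a single neuron are all assumptions, not figures from the lecture.

```python
def volley_spike_times(tone_hz: float, n_neurons: int, duration_s: float) -> list[list[float]]:
    """Assign each cycle of the tone to one neuron in rotation.

    Returns one list of spike times (in seconds) per neuron.
    """
    period_s = 1.0 / tone_hz
    n_cycles = int(round(duration_s * tone_hz))
    spikes: list[list[float]] = [[] for _ in range(n_neurons)]
    for cycle in range(n_cycles):
        spikes[cycle % n_neurons].append(cycle * period_s)
    return spikes


ASSUMED_MAX_RATE_HZ = 1000.0  # illustrative ceiling for one neuron, not from the text
TONE_HZ = 4000.0              # upper limit mentioned in the text
DURATION_S = 0.01

group = volley_spike_times(TONE_HZ, n_neurons=4, duration_s=DURATION_S)
per_neuron_rate = len(group[0]) / DURATION_S
combined_rate = sum(len(neuron) for neuron in group) / DURATION_S

print(f"each neuron fires about {per_neuron_rate:.0f} times per second")
print(f"the group as a whole marks about {combined_rate:.0f} cycles per second")
```

In this sketch no single neuron exceeds the assumed ceiling, yet the group taken together keeps pace with every cycle of the 4,000 hertz tone.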

The second mechanism, the place theory of hearing, proposes that different areas of the cochlea respond to different frequencies. Higher tones excite the areas closest to the opening of the cochlea, near the oval window, whereas lower tones excite the areas near the narrow tip of the cochlea, at the opposite end. Pitch can therefore be determined in part by the area of the cochlea that is firing most frequently.

Another important thing to look at is the placement of the ears around our head.

The ears are placed on either side of the head, and this gives us the benefit of stereophonic hearing, which means that you can locate a sound in three-dimensional space. If a sound occurs on your left side, the left ear receives the stimulation earlier, and you localize the sound to your left; if the sound occurs on your right side, the right ear detects it first, and you respond accordingly.

Although the distance between the two ears is only around six inches and sound travels at around 750 miles per hour, these time and intensity differences are easily detected. When a sound is equidistant from both ears, for example when it is directly in front or behind, we have slightly more difficulty pinpointing its exact location, and we may then move our head to change the position of our ears and get a better hint of where the sound is coming from. You might have seen certain animals do that.
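To get a feel for how small these time differences are, here is a rough sketch using the figures quoted above (six inches, 750 miles per hour). It assumes a deliberately simplified geometry in which the extra path to the far ear is the ear gap times the sine of the source angle, ignoring the shape of the head; that model and the function name `interaural_delay_s` are assumptions for illustration only.

```python
import math

INCH_TO_M = 0.0254
MPH_TO_M_PER_S = 0.44704


def interaural_delay_s(azimuth_deg: float, ear_gap_in: float = 6.0,
                       speed_mph: float = 750.0) -> float:
    """Approximate arrival-time difference between the two ears, in seconds.

    azimuth_deg: 0 is straight ahead, 90 is directly to one side, 180 is behind.
    Simplified model (an assumption): extra path = ear gap * sin(azimuth).
    """
    gap_m = ear_gap_in * INCH_TO_M
    speed_m_per_s = speed_mph * MPH_TO_M_PER_S
    return gap_m * math.sin(math.radians(azimuth_deg)) / speed_m_per_s


for angle in (0, 30, 90, 180):
    delay_ms = interaural_delay_s(angle) * 1000
    print(f"source at {angle:>3} degrees: delay of about {delay_ms:.3f} ms")
```

Under these assumptions the largest delay, for a sound directly to one side, is under half a millisecond, and the delay vanishes for sounds straight ahead or directly behind, which is the equidistant case that is hard to localize.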

Speech Perception

The most important part of auditory perception, as I said earlier, is listening to speech, so we will spend some time on speech perception as a special case of auditory perception. During speech perception, the auditory system needs to analyze the sound vibrations generated by somebody's conversation. A few characteristics of speech perception have been proposed by Matlin.

For example, the fundamental unit of speech sounds proposed by linguists and psycholinguists is the phoneme. A phoneme is any basic sound of a language: if you are listening to a language, you can reduce the entire language to a set of very basic sounds called phonemes. This holds whether I am talking about Hindi or about English; in English, for example, the sounds associated with letters such as a, b, and c can be referred to as phonemes.

Another important characteristic is that when you are listening to somebody speak, you need to impose word boundaries, because otherwise everything will be jumbled up and you will not be able to make sense of the message being conveyed. This is easier when you are reading a text, where spaces tell you that one word ends here and the next begins there. With the spoken signal you have to do it yourself, and that is an important feat achieved by humans.

If you want to appreciate how difficult this is, listen to a movie or a song in a language you do not know at all, and you will see how hard it is to find the word boundaries. Another characteristic is that the pronunciation of these phonemes, or basic sounds of speech, varies tremendously from speaker to speaker, and also within a speaker from situation to situation.

Theories of Speech Perception

An important task of the auditory perception system is to cope with this variation and still not produce too many different results for the same set of sounds; we will talk about this in more detail later. Further, context allows listeners to fill in missing sounds. For example, if you are talking to somebody on a noisy phone line, part of the signal will often be missing, but you will still be able to understand what the other speaker is saying. You are able to do this because you have contextual information: what the topic is, who is talking, and so on. You use that context to fill in the missing bits of information and still understand the conversation very well.

The final thing that might be very important from a listener's point of view is the use of visual cues. You are not only listening to somebody; most of the time you are also looking at them while they talk, so you have input from lip movements, gestures, and the like. This multisensory, multimodal information also helps you solve the puzzle of speech perception. We will now talk about speech perception in more detail by looking at the theories of speech perception.

What are the theories of Speech Perception?

There are two or three classes of theories of speech perception. The most prominent of them is called the special mechanism approach, also referred to as the motor theory of speech perception. It says that speech perception is accomplished by a naturally selected module. This special speech perception module monitors whatever acoustic stimulation you are receiving, and it reacts strongly whenever the incoming signal contains characteristic patterns related to speech. When the speech module recognizes that an incoming stimulus contains a speech signal, it preempts the other auditory processing systems, that is, it prevents them from acting on that signal, because the processing required to understand speech is slightly different from the processing required to understand non-speech sounds.

Non-speech sounds are analyzed according to basic properties such as frequency, amplitude, and timbre, and we are able to perceive these characteristics of non-speech sounds accurately. When the speech module latches on to an acoustic stimulus, however, it prevents this kind of spectral analysis and instead tries to understand the speech in terms of your prior knowledge of linguistic variables, which is more useful for understanding speech. This preemption, or stopping, of general auditory processing on a speech signal can sometimes lead to what is called duplex perception.

What is duplex perception?

Duplex perception arises under particular experimental scenarios, and I want to describe a simple and very interesting experiment, reported by Liberman and Mattingly in 1989. They created artificial speech signals that represented sounds like da and ga. The two signals differ from each other in one respect: one part of each signal is flat and does not change, while the other part, in the second formant, is either increasing or decreasing. So these speech sounds have two parts. Schematically, da would be represented by a flat line and an increasing curve, and ga by a flat line and a decreasing curve. The flat line was referred to as the base, and the increasing or decreasing curves are called transitions.

What the researchers did was split the base and the transition and, using headphones, present them separately to the two ears. Between your two ears you are still getting the whole signal, but you are getting the base in one ear and the transition in the other. This creates an interesting scenario, and the question one can ask is how people would perceive this kind of stimulus: they are still getting the whole stimulus, but split across the two ears.
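The split presentation can be sketched as a two-channel signal, with the steady base in one channel and the short transition in the other. This is a crude stand-in, assuming pure sine tones in place of synthesized formants; the sample rate, all frequencies and durations, the choice of which ear gets which part, and the helper names `steady_tone`, `glide`, and `dichotic` are invented for illustration and are not the parameters of the original experiment.

```python
import math

SAMPLE_RATE = 16000  # samples per second (assumed)


def steady_tone(freq_hz: float, duration_s: float) -> list[float]:
    """A flat, unchanging tone standing in for the base."""
    n = int(round(SAMPLE_RATE * duration_s))
    return [math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE) for i in range(n)]


def glide(start_hz: float, end_hz: float, duration_s: float) -> list[float]:
    """A tone whose frequency rises or falls linearly, standing in for a transition."""
    n = int(round(SAMPLE_RATE * duration_s))
    samples, phase = [], 0.0
    for i in range(n):
        freq = start_hz + (end_hz - start_hz) * i / max(n - 1, 1)
        phase += 2 * math.pi * freq / SAMPLE_RATE  # accumulate phase so the glide is smooth
        samples.append(math.sin(phase))
    return samples


def dichotic(base: list[float], transition: list[float]) -> list[tuple[float, float]]:
    """Pair the base (left channel) with the transition (right channel).

    The shorter signal is padded with silence so both channels have equal length.
    """
    n = max(len(base), len(transition))
    left = base + [0.0] * (n - len(base))
    right = transition + [0.0] * (n - len(transition))
    return list(zip(left, right))


base = steady_tone(700.0, 0.25)                                   # hypothetical base
rising_stimulus = dichotic(base, glide(1200.0, 1800.0, 0.05))     # increasing transition
falling_stimulus = dichotic(base, glide(1800.0, 1200.0, 0.05))    # decreasing transition
print(len(rising_stimulus), "stereo frames per stimulus")
```

Each frame holds one sample for each ear, so played over headphones the listener would receive the complete signal only by combining the two channels.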

They found that people perceived two different things at the same time. In the ear into which the transition was played, let us say the right ear, they perceived a high-pitched whistle or chirping sound, but at the same time they also perceived the original syllable. So they perceived the chirp and the whole syllable together. This was interesting, and people wanted to understand why it happens. Liberman and colleagues argued that simultaneously perceiving the transition in two ways, as a chirp and as part of a phoneme, reflects the simultaneous operation of both the speech module and the general-purpose auditory processing mechanism, which analyzes the incoming acoustic stimulus as a non-speech stimulus.

Duplex Perception

So duplex perception happens because the auditory system cannot treat the transition and the base as coming from the same source. It starts to analyze the transition separately, using non-speech analysis, and at the same time it recognizes that the transition is part of a larger signal that also includes the base. As a result it generates two kinds of results: the transition is heard as a whistle, and it is also heard as part of the same phoneme. Another important aspect of this special mechanism theory is that, as I said, it is also the motor theory of speech perception.

We were saying that the phoneme is the basic unit of speech perception, but the motor theory proposes something different. It says that the gestures involved in producing speech sounds should be treated as the fundamental units of representation. So if you want to understand a speech sound, you need to understand which vocal gestures produced it. What are these vocal gestures? When you speak, you move your lips, your tongue, your mouth, and your vocal cords in special ways. All these organs are called articulators, and specific movement patterns in them generate sounds. The plan of these movements is what is called a gestural score.

What is a gestural score?

What this theory says is that when the motor system has to produce a sound, it creates a particular plan of movement for these articulators: part A will vibrate now, part B will move next, and so on. This kind of plan is called a gestural score, and the motor theory of speech perception says that if you can figure out the gestural score, you can understand whatever speech is being said. You do not really need to go to the sounds themselves; you just work out which movements were made and which movement produces which sound, and that will help you understand the speech much better than an analysis at the phoneme level.

For example, the core part of the gesture used to produce the two sounds di and du is the same. The core is the d sound, which is produced when you put your tongue at the back of your teeth. The other part, how your lips move when you say di or du, is different.

If you can get this core part right, you will be able to understand which sound has been produced and not be confused by the co-articulation effects of lip movements and the like. Rather than trying to map acoustic signals directly to phonemes, Alvin Liberman and his colleagues propose that we must map acoustic signals directly to the gestures that produce them, as there is a closer relationship between gestures and phonemes. They say that the relation between perception and articulation will be considerably simpler than the relation between perception and sound. They further say that perceived similarities and differences will correspond more closely to articulatory similarity than to acoustic similarity. For example, differences between two acoustic signals will not cause you to perceive two different phonemes as long as the core articulatory gesture is the same. Another aspect of this theory is the concept of categorical perception.

What is categorical perception?

Categorical perception means that when you hear a wide variety of physically distinct acoustic stimuli, you do not treat all of them differently. Instead, you say that this set of stimuli belongs to one category and that other set of stimuli belongs to another category. For example, every vocal tract is different, mine and yours included, and each produces different kinds of sound waves. So the way I say a particular word, let us say pink or blue or black, the way you say it, and the way somebody else says it might be very different. But you must have noticed that we treat all of these sounds as very similar and derive the same meanings from them, even though their physical or acoustic signatures might be drastically different from each other.

The same holds even within an individual. If I am sleepy, or very tired, or panting and exhausted, the speech signal I produce will be physically very different. But our phonological system is blind to these kinds of physical differences and perceives all of these different signals as instances of one category. If I am talking about the first sound in pink, for example, it treats every one of these variants as an instance of the sound p.

This is interesting and important in the sense that it helps us understand a wide variety of sounds as one, even though their physical signatures might be slightly different. Further, as I already said, all of our voices differ in quality from each other, but we still place the speech sounds coming from each of us in one category. One of the reasons we are able to do this so easily is that anything you say in a language such as English can be broken down into the 40 to 45 phonemes that the language has. So anything that is said in any way by anyone can be mapped onto these 40 to 45 phonemes.

In addition, although the acoustic properties of speech stimuli can vary across a wide range, our perception does not change in these little steps. We are insensitive to this kind of variation in the speech signal, but if the speech signal starts changing too much, we assign it to a different phoneme and start hearing it differently. Let me take an example. The only difference between the sound b and the sound p in English is that b is a voiced sound whereas p is not a voiced sound.

The details are not really important here; you just have to get the point. Both of these sounds are technically called labial plosives, and voiced or not voiced refers to what the vocal cords do when the sound is produced.

What are labial plosives?

A labial plosive is produced by bringing both lips together to block the flow of air and then releasing it suddenly, so that there is a burst of air. After this burst of air, the vocal cords start vibrating, either after a gap or without one. The time delay between the air burst and the vocal cords starting to vibrate is called voice onset time (VOT). For the b sound, the vocal cords start vibrating while your lips are still closed or immediately after they open; for the p sound, they start vibrating after a slight delay, that is, after a particular voice onset time. Voice onset time is the only difference between the sounds b and p. It is a variable that can take any value whatsoever, so it can be called a continuous variable.

Now imagine a set of sounds along this variable, with many different voice onset times. How will we perceive these sounds, as b, as p, or as something else? If two sounds differ from each other by at least 20 milliseconds, we hear them as b and p separately. But if the difference is less than 20 milliseconds, say around 7 or 15 milliseconds, we perceive them as the same sound. Sounds that have different acoustic signals but are perceived as the same sound are called allophones.
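The way a continuous variable gets collapsed into categories can be sketched as a simple threshold rule. This is a simplification that assumes a single sharp boundary at 20 milliseconds of voice onset time, loosely based on the figure mentioned above; real boundaries vary with speaker, language, and context, and the names `VOT_BOUNDARY_MS`, `perceived_category`, and `heard_as_different` are hypothetical.

```python
VOT_BOUNDARY_MS = 20.0  # assumed category boundary; an illustrative value only


def perceived_category(vot_ms: float) -> str:
    """Map a continuous voice onset time onto one of two perceived sounds."""
    return "b" if vot_ms < VOT_BOUNDARY_MS else "p"


def heard_as_different(vot_a_ms: float, vot_b_ms: float) -> bool:
    """Two tokens are heard as different sounds only if they fall in different categories."""
    return perceived_category(vot_a_ms) != perceived_category(vot_b_ms)


for vot in (0, 7, 15, 25, 40, 60):
    print(f"VOT {vot:>2} ms -> heard as {perceived_category(vot)}")

print(heard_as_different(7, 15))   # both on the short side of the boundary
print(heard_as_different(15, 25))  # on opposite sides of the boundary
```

In this sketch the pair at 7 and 15 milliseconds is treated as the same sound, while the pair at 15 and 25 milliseconds is heard as two different sounds even though the physical difference is similar in size.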

In other words, we experience sounds with a range of short VOTs, let us say anything shorter than about 15 milliseconds, as b, and anything longer we start perceiving as p. Here you can see that the auditory perception system is sensitive to differences, but it washes over all the differences that are not meaningful, the ones that are just variations between different speakers and situations. This is important because it helps us understand speech produced by a variety of speakers under a variety of circumstances.

I would like to close here. In the next article we will continue with speech perception and other aspects of the motor theory of speech perception. Thank you.
